Introducing Chip Benchmark: Hardware-Centric Performance Insights for AI Workloads

As the AI hardware ecosystem rapidly expands, choosing the right accelerator for a given workload has become increasingly complex. Different chips excel in different scenarios, but apples-to-apples comparisons remain difficult without standardized, open tooling.

We're excited to introduce Chip Benchmark, an open-source benchmarking suite purpose-built to evaluate the performance of open-weight LLMs across diverse hardware platforms. Chip Benchmark currently supports the NVIDIA A100, H100, and L40S and the AMD MI300X, with plans to add other hardware vendors and models.

Built for Transparency

We built Chip Benchmark for reproducibility and easy comparison. Its open-source scripts, available here, run standardized tests across different hardware and log results in both human-readable and machine-readable formats.

It measures key metrics like throughput, latency, and time-to-first-token across a range of sequence lengths and concurrency levels. Results are organized by model, hardware, and precision for clear, system-level insights.
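
To make these three metrics concrete, here is a minimal sketch of how they could be derived from per-request timestamps. It is not taken from the Chip Benchmark scripts: the `RequestTiming` record, its field names, and the `summarize` helper are hypothetical, and throughput is assumed to mean output tokens per second of wall-clock time across all concurrent requests.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestTiming:
    """Timestamps in seconds for one request (hypothetical record layout)."""
    start: float          # when the request was sent
    first_token: float    # when the first output token arrived
    end: float            # when the last output token arrived
    output_tokens: int    # number of generated tokens


def summarize(timings: list[RequestTiming]) -> dict[str, float]:
    """Aggregate one benchmark run into throughput, latency, and TTFT."""
    # Wall-clock span of the whole run, from the earliest send to the latest finish.
    wall_clock = max(t.end for t in timings) - min(t.start for t in timings)
    total_tokens = sum(t.output_tokens for t in timings)
    return {
        "throughput_tok_per_s": total_tokens / wall_clock,
        "mean_latency_s": mean(t.end - t.start for t in timings),
        "mean_ttft_s": mean(t.first_token - t.start for t in timings),
    }


# Two requests issued concurrently (made-up numbers for illustration).
timings = [
    RequestTiming(start=0.0, first_token=0.25, end=2.0, output_tokens=100),
    RequestTiming(start=0.0, first_token=0.75, end=4.0, output_tokens=100),
]
summary = summarize(timings)
print(summary)
```

Repeating a run like this for each sequence length and concurrency level would give one row per configuration, which is the kind of grid the results are organized around.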

Dashboard Insights

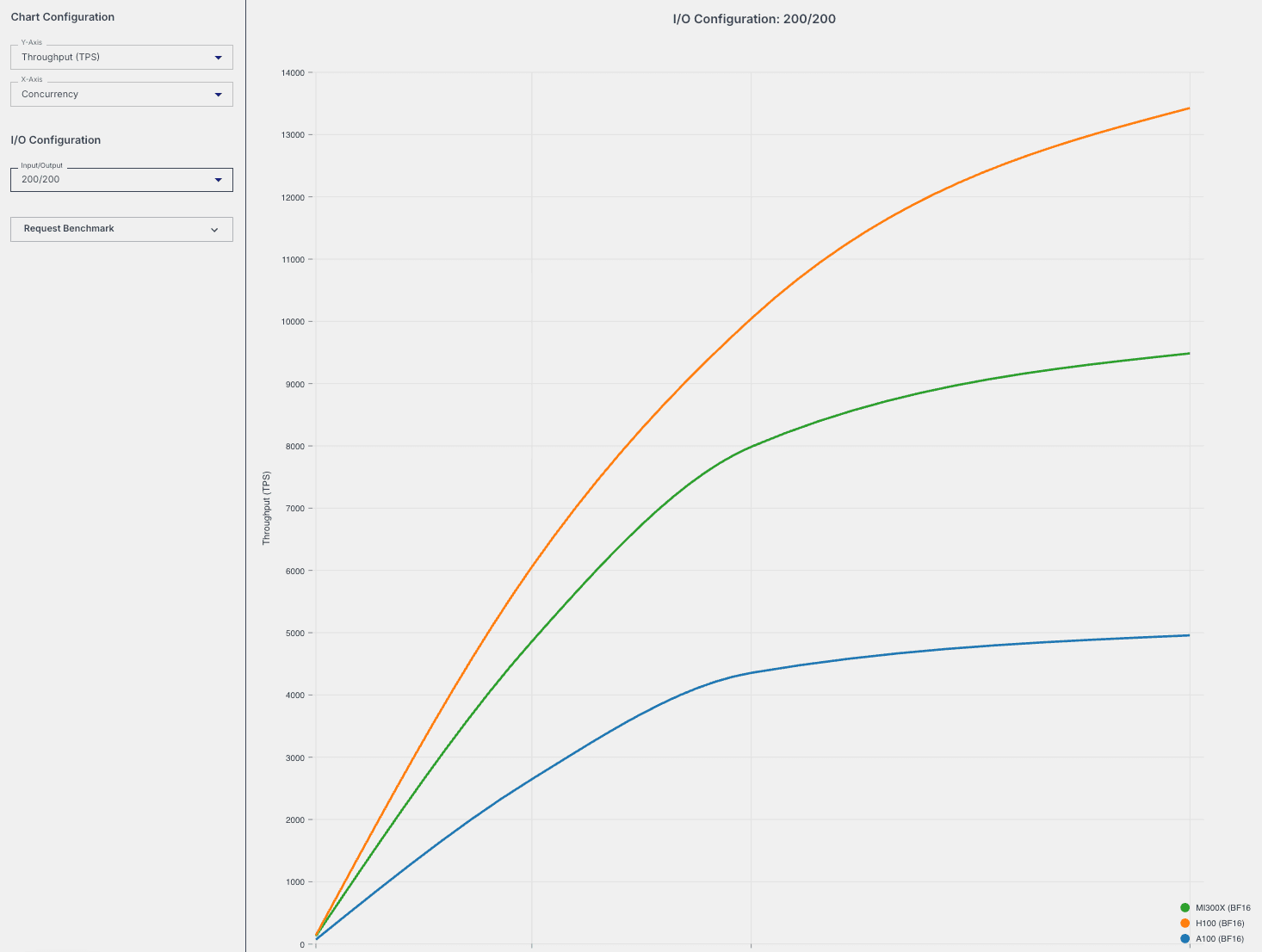

Alongside the benchmarking scripts, we offer an interactive web-based dashboard to visualize results. Users can filter by model, hardware, and precision, and view detailed throughput and latency comparisons.

In the example shown, throughput curves for Llama-3.1-8B-Instruct reveal that while throughput on both the H100 and MI300X scales with concurrency, the H100 delivers higher throughput at higher concurrency levels. This kind of insight is critical for informed hardware selection, especially at deployment scale.

Key Features

Chip Benchmark provides comprehensive evaluation capabilities designed for both researchers and practitioners:

Multi-Hardware Support

Out of the box, Chip Benchmark supports NVIDIA's A100, H100, and L40S GPUs, as well as AMD's MI300X. The modular architecture makes it straightforward to add support for additional hardware platforms.

Comprehensive Metrics

Beyond simple throughput measurements, Chip Benchmark captures detailed performance characteristics including latency distributions, memory utilization patterns, power consumption (where available), and cost-efficiency metrics.
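
As an illustration of two of these metrics, the sketch below computes latency percentiles and a simple cost-efficiency figure. The function names, the choice of p50/p95/p99, and the definition of cost efficiency as dollars per million output tokens at a given hourly hardware price are all assumptions made for this example, not the suite's documented definitions.

```python
from statistics import quantiles


def latency_percentiles(latencies_s: list[float]) -> dict[str, float]:
    """Summarize a latency distribution with p50, p95, and p99 (in seconds)."""
    # quantiles(n=100) returns 99 cut points; cut point i (1-based) is the i-th percentile.
    cuts = quantiles(latencies_s, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


def cost_per_million_tokens(hourly_price_usd: float, throughput_tok_per_s: float) -> float:
    """Dollars spent per one million generated tokens at a sustained throughput."""
    tokens_per_hour = throughput_tok_per_s * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000


# Made-up inputs for illustration: 101 latency samples and a hypothetical hourly price.
latencies = [float(x) for x in range(1, 102)]
percentiles = latency_percentiles(latencies)
cost = cost_per_million_tokens(hourly_price_usd=3.6, throughput_tok_per_s=1000.0)
print(percentiles, cost)
```

Reporting tail percentiles alongside the mean matters because two accelerators with similar average latency can differ widely in their slowest requests.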

Real-World Scenarios

The benchmark suite includes tests for various deployment scenarios: single-request latency optimization, high-throughput batch processing, long-context document processing, and multi-turn conversational interactions.
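
One way to picture these scenarios is as points in a space of input length, output length, and concurrency. The sketch below is purely illustrative: the `Scenario` class, the scenario names, and every token count and concurrency value are hypothetical and are not the settings used by Chip Benchmark.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Scenario:
    """One workload shape (hypothetical structure, illustrative values)."""
    name: str
    input_tokens: int
    output_tokens: int
    concurrency: int


# Illustrative values only; the real suite's settings may differ.
SCENARIOS = [
    Scenario("single_request_latency", input_tokens=512, output_tokens=128, concurrency=1),
    Scenario("high_throughput_batch", input_tokens=512, output_tokens=128, concurrency=64),
    Scenario("long_context_document", input_tokens=16384, output_tokens=256, concurrency=4),
    Scenario("multi_turn_chat", input_tokens=2048, output_tokens=128, concurrency=16),
]


def sweep(scenario: Scenario, concurrency_levels: list[int]) -> list[Scenario]:
    """Return copies of a scenario, one per concurrency level to test."""
    return [replace(scenario, concurrency=c) for c in concurrency_levels]


variants = sweep(SCENARIOS[0], [1, 2, 4])
for v in variants:
    print(v)
```

Keeping each scenario as an immutable record makes it easy to log exactly which workload shape produced a given result.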

Open Source and Community-Driven

Chip Benchmark is released under an open-source license, encouraging community contributions and ensuring transparency in testing methodologies. We believe that open, reproducible benchmarks are essential for advancing the state of AI infrastructure.

Getting Started

The Chip Benchmark suite is available on GitHub with comprehensive documentation and quick-start guides. Whether you're evaluating hardware for a new deployment or optimizing existing infrastructure, Chip Benchmark provides the insights you need to make informed decisions.

Looking Ahead

As the AI hardware landscape continues to evolve, so will Chip Benchmark. Our roadmap includes support for additional hardware platforms, more sophisticated workload scenarios, and integration with popular MLOps tools. We're committed to maintaining Chip Benchmark as the go-to resource for hardware-centric AI performance evaluation.

Join the Community

We invite researchers, engineers, and hardware vendors to contribute to Chip Benchmark. Whether it's adding support for new hardware, improving testing methodologies, or sharing performance results, your contributions help build a more transparent and efficient AI ecosystem.

Get Involved

We welcome contributors, hardware vendors, and researchers. Find the repository here, and let's benchmark the future together.

Want to see a specific benchmark? Request a benchmark or sign up for notifications (hit the bell icon in the top right) to stay updated as we add new hardware and results!